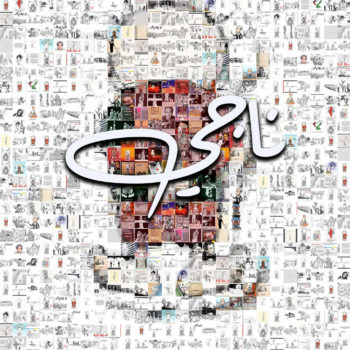

Arab Future Tripping VR Prototype

Above is the first rendering of a prototype of a data-driven virtual world of social media. This world is made up of 60,000 users (trees) who posted about half a million tweets (frogs, mushrooms, and deer) on #WomensMarch over 24 hours in 2017 (time indicated by scenes). Original score gifted by DJ Underbelly, “Kali Uchis Watch Want Me 4.”

Below is video documentation of a new immersive world of live tweeting mixed with historical social media. The first shots show the 3D VJ Um Amel model being connected (bone by bone) to the Kinect system, and live tweets appearing in our VR world. The second set of scenes interacts with a historical set of tweets from January 21, 2017 on #WomensMarch. As the cyborg approaches tree stumps, the trees grow and the data appears. Built using Houdini, Unreal, and Kinect. These are prototypes for future development.

On her tenth anniversary in 2018, VJ Um Amel will release a second mediascape of the same social media archives that run the R-Shief Media System as Part III of Storyboard of the Arab Resistance. But rather than software applications and data visualizations, Arab Future Tripping will embody a skin or stitch holding together various immersive and performative modalities.

VJ Um Amel’s VR research cluster is made possible by a UCSB Academic Senate Faculty Research Grant, which supports the prototyping and production of this 360-degree, first-person, immersive virtual reality (VR) story of the Arab Uprisings of 2011. The Twitter data is collected, processed, and produced by R-Shief, and the VR production uses Unreal, Houdini, and Grasshopper. Thanks to all, past, present, and future, who contribute to this project.